Why Gen AI Adoption Fails in Organizations

Introduction

Most organizations today sit on the wrong side of the GenAI Divide: adoption is high, but disruption is low. Generic tools like ChatGPT see widespread use. Yet, despite heavy investment and a flood of pilots, only a small fraction of firms are moving beyond experimentation to meaningful transformation. Those that stall commonly cite integration challenges and poor workflow fit.

An MIT analysis of 300 public implementations revealed that only a few industries were seeing deep structural change. It also found that large firms were leading in pilots but lagging in scaling, and that investment was skewed towards visible, top-line functions over high-ROI back-office functions, indicating a lack of strategic application.

The barriers to scaling AI across enterprises, gathered from multiple reports, align with the findings of my thesis:

weak leadership alignment,

poor data quality,

underinvestment in infrastructure,

tech-first rather than problem-first approaches,

attempts to apply immature solutions, and

human challenges.

That being said, early adopters that cross the divide are beginning to see impact: selective workforce efficiencies, reduced outsourcing spend, improved customer retention, and higher sales conversion. These examples show that when applied strategically, learning-capable systems can deliver real value.

6 Barriers to AI Scaling

Let's take a deeper dive and look at the barriers to scaling in more detail. You'll note that the barriers below are mostly organizational challenges rather than purely technical limitations. The positive take on this is that even if you don't have a raft of technical talent, you can still succeed in scaling AI implementation.

1. Leadership-Driven Failures

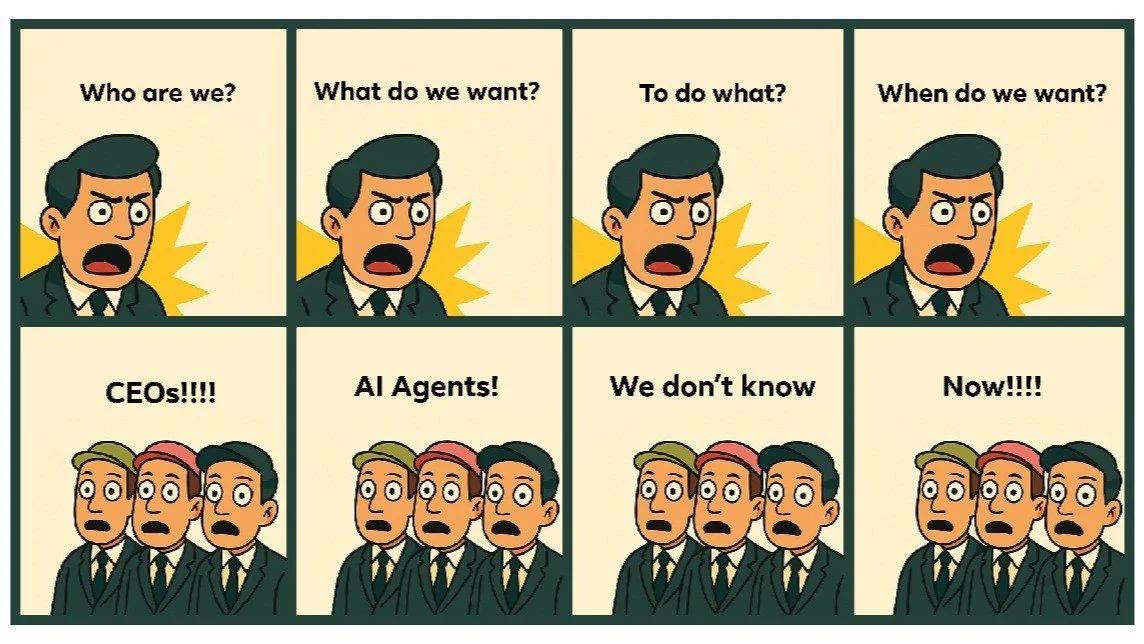

We've all seen the image below in our LinkedIn feeds and chuckled.

AI, but no ROI

The AI transformation graveyard is littered with ambitious projects doomed by leadership’s fundamental misunderstandings, from misdiagnosed problems and misaligned objectives to forced solutions and impossible expectations.

As executives chase the promise of AI, many deploy technologies without strategic clarity, turning potentially revolutionary tools into expensive corporate ornaments that solve nothing. So it is no surprise that 84% of interviewees from the same MIT survey cited leadership failures as the primary cause of project failure.

This often stems from a lack of clarity about the intended business problem and the metrics for success. For example, a business leader might request an algorithm to predict product pricing when what the business actually needs is to optimize profit margins. This disconnect between business needs and technical execution leads to solutions that fail to address the core problem.

AI hype can inflate expectations about its capabilities and timelines. Business leaders may expect quick wins, or may fail to appreciate how time-intensive data acquisition, cleaning, and model training are.

The AI landscape is moving faster than most people can keep up with. A lack of sustained commitment, or a tendency to chase shiny objects, leads to frequent shifts in priorities, and AI projects are then abandoned before they can deliver tangible results. Leaders must commit to long-term project timelines, ideally at least one year, to achieve meaningful outcomes, which requires applying a strategic lens.

2. Data-Driven Failures

Effective AI models require large volumes of high-quality data. However, many organizations still struggle with data quality issues stemming from inadequate investment in data infrastructure and a shortage of skilled data engineers. Legacy data collected for compliance or logging purposes might lack the context or granularity needed for AI training, and the resulting incomplete or imbalanced datasets can lead to biased models.

3. Underinvestment in Infrastructure

Building and deploying AI models requires robust infrastructure for data management, model training, and deployment. Underinvestment in this area leads to longer development cycles, higher failure rates, and a reduced ability to leverage data assets effectively. To address this issue, it's critical to develop an AI architecture framework that supports modularity, scalability, flexibility, and reusability. Alternatively, develop external partnerships that give you access to the infrastructure you need to drive adoption quickly, with clear accountability built in from the start.

4. Focus on Technology Over Problem-Solving

Technical teams and technophiles can be drawn to the latest AI trends and shiny objects, and their passion can lead them to implement complex solutions when simpler ones would suffice. A laser focus on solving the business problem with a defined ROI, rather than chasing technological novelty, is crucial for successful AI implementation.

5. Immature Technologies

While AI is rapidly advancing, some problems remain beyond its current capabilities, and attempts to apply AI to them will fail. Leaders need to understand AI’s limitations and select projects that are a good fit for the technology’s current state of development.

We recently saw the CEO of Klarna cut 700 employees, citing heavy investment in AI for customer service instead, only to reverse the decision and hire humans back to restore its customer service levels because the technology was not yet mature enough. That being said, the technology is improving at a phenomenal rate and will likely lead to job losses in the future, which will need to be handled in a timely and sensitive way.

6. Human Considerations

The GenAI divide is not only technical; it is also human. Employees often prefer consumer tools like ChatGPT because they are faster, easier, and more intuitive than enterprise systems, even when powered by similar models. This usability gap helps explain why low-cost tools often see higher adoption than costly bespoke solutions. To close the gap, enterprise tools must match consumer-grade UX; otherwise, adoption will stall.

In addition to usability, three other human factors shape the success of AI initiatives:

Talent: A scarcity of AI professionals makes training and upskilling existing staff challenging, yet essential.

Culture: Fear of replacement and low trust in AI outputs stall adoption, while learning cultures accelerate it.

Agility: Iterative AI projects require flexible, cross-functional collaboration, not rigid, traditional methods, so siloed organizations will naturally struggle.

Ultimately, bridging the divide depends as much on user trust and organizational culture as it does on infrastructure or algorithms.

Conclusion

Crossing the GenAI Divide is about more than chasing the next shiny tool. It is about disciplined leadership, strategic clarity, and a willingness to rewire how the organization learns and adapts.

The evidence is clear: pilots fail not because AI lacks potential, but because enterprises fail to align leadership priorities, invest in data and infrastructure, and foster a culture that trusts and embraces change.

For executives, the mandate is straightforward:

Lead with strategy, not technology. Define the business problems first, then select the right AI applications to solve them.

Invest where it matters. Data quality, infrastructure, and governance may not be glamorous, but they are the foundations of scalable value. And focus on ROI, ROI, ROI.

Build adaptive cultures. Equip teams with the skills, trust, and flexibility to experiment and learn.

Commit for the long term. Transformation rarely happens in a single quarter; it requires sustained focus and patience.

The organizations already seeing measurable gains prove that AI can deliver efficiencies, new revenue streams, and stronger customer outcomes. But the difference between a graveyard of pilots and real transformation lies in leadership choices. Executives who combine vision with execution discipline will not just adopt AI; they will reshape their industries.